Understanding Model Context Protocol (MCP) - Part 1

From API Chaos to Universal Standards: Understanding the MCP Foundation That Powers Modern AI Integration.

The Birth of a New Standard: How MCP Came to Be

Large Language Models (LLMs) like ChatGPT, Claude, and Gemini can write code, debug complex problems, and explain technical concepts with remarkable fluency. Yet despite their brilliance, they’ve been operating in isolation—powerful minds trapped in a room with no doors or windows.

Picture this: you have an AI assistant that can write flawless TypeScript, suggest perfect Angular component architectures, and identify bugs in milliseconds. But it can’t see your actual project files, can’t access your database, can’t read your API documentation, and has no idea what tools you’re using. It’s like hiring a world-class architect who has to design your house without ever seeing the plot of land, checking local building codes, or knowing what materials are available.

This has been the fundamental limitation of AI development tools—until now.

The Genesis: Anthropic’s Vision

In November 2024, Anthropic—the AI safety company founded by former OpenAI researchers and creators of Claude—unveiled something that would change the landscape of AI integration: the Model Context Protocol (MCP).

The team at Anthropic had been grappling with a persistent problem. As they worked on making Claude more useful for real-world tasks, they kept running into the same wall: every time they wanted to connect Claude to a new tool, service, or data source, they had to build a custom integration from scratch. Database access required one approach. File system operations needed another. API integrations each demanded unique implementations.

It was the API chaos problem we'll explore below, but at a massive scale.

The Open Standard Revolution

Rather than building yet another proprietary solution, Anthropic made a bold decision: they would create an open, universal protocol that any AI system could use to connect with any tool or service. On November 25, 2024, they released MCP as an open-source specification, complete with SDKs in Python and TypeScript.

The core idea was elegant: instead of building point-to-point integrations between every AI model and every service, create a standardized “language” that both sides could speak. Think of it as establishing USB-C for AI connectivity—one protocol to rule them all.

What made MCP truly revolutionary wasn’t just the technical specification. It was the philosophy behind it:

Open and Free: Anyone could implement it, no licensing fees, no vendor lock-in

Bidirectional: Both AI systems and services could initiate communication

Secure by Design: User consent and control are core principles, and the specification defines an OAuth-based authorization framework for HTTP-based servers

Simple Yet Powerful: Easy enough for individual developers to implement, robust enough for enterprise systems

The Unprecedented Adoption

Here’s where the story gets really interesting. In the tech world, standards battles typically drag on for years. Companies fight over competing specifications, each pushing their own solution. But something different happened with MCP.

By March 2025, just four months after its introduction, OpenAI (Anthropic’s biggest competitor) announced it was adopting MCP, starting with support in its Agents SDK. Let that sink in: a company competing directly with Anthropic chose to embrace its rival’s open standard rather than build its own.

April 2025 brought another bombshell: Google DeepMind integrated MCP support into their AI offerings. Microsoft followed in May 2025, incorporating MCP into Azure AI services and announcing plans to support it across their development tools ecosystem.

When fierce competitors this quickly agree on a standard, you’re witnessing something rare in tech history. It’s reminiscent of how HTTP became the universal protocol for the web, or how REST APIs became the de facto standard for web services.

From Concept to Ecosystem

Today, less than a year after its introduction, MCP has evolved from a novel idea into a thriving ecosystem. Development tools like Cursor, Windsurf, and Zed have built-in MCP support. Major SaaS companies are releasing official MCP servers. The community has created hundreds of integrations.

What started as Anthropic’s solution to their own integration challenges has become the foundation for how AI systems interact with the digital world.

And that’s exactly what we’re going to explore in this series: first the principles behind MCP in this article, then how to build our own MCP server and bring it into an Angular project, joining this revolution in AI integration.

The Fundamental Problem: AI That Can Only "Talk"

When you ask ChatGPT to "plan me a week-long holiday in Costa Rica," it can tell you exactly what to do, suggest amazing destinations, and even help you create detailed itineraries, but on its own it cannot open a browser, navigate to travel sites, check real-time availability, or complete bookings. That is the core limitation of today's LLMs: they are fundamentally text generators, not action-takers.

Enter AI Agents: The Hands and Feet of AI

This is where AI agents come into play. Think of an AI agent as an intelligent assistant that combines the reasoning power of an LLM with the ability to actually do things. It's like giving that brilliant person in the room a set of tools, internet access, and a way to interact with the outside world.

An AI agent follows a simple but powerful pattern:

Receive a request from the user

Think about it using an LLM ("What kind of holiday experience does the user want?")

Take actions by calling external services and APIs

Think again about the results ("Do these options match their preferences? Do I need more information?")

Repeat until the task is complete

For example, when you say "plan me an adventure holiday in Costa Rica," an AI agent might:

Ask the LLM to extract details (budget, interests, group size, dates)

Call tourism APIs to find available activities and accommodations

Check weather services for seasonal considerations

Ask the LLM to analyze options against your adventure preferences

Present a complete itinerary with bookable options
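The receive, think, act, repeat pattern above can be sketched in a few lines of JavaScript. This is a minimal illustration, not a real agent framework: fakeLlm is a hypothetical stand-in for a model call that simply picks the next step, and the two tools return canned data instead of calling real travel services.

```javascript
// Minimal agent loop: think (ask the "LLM"), act (call a tool), repeat until done.
// fakeLlm and both tools are hypothetical stand-ins that return canned data.
const tools = {
  find_activities: ({ destination, type }) =>
    type === 'adventure' ? [`Zip-lining in ${destination}`, `Volcano hike in ${destination}`] : [],
  check_weather_forecast: ({ destination }) => `${destination}: dry season, low rain risk`,
};

// Stub "LLM": inspects what has been gathered so far and decides the next step.
function fakeLlm(request, observations) {
  if (!('find_activities' in observations)) {
    return { action: 'find_activities', args: { destination: request.destination, type: request.type } };
  }
  if (!('check_weather_forecast' in observations)) {
    return { action: 'check_weather_forecast', args: { destination: request.destination } };
  }
  return {
    action: 'finish',
    answer: { activities: observations.find_activities, weather: observations.check_weather_forecast },
  };
}

function runAgent(request, maxSteps = 5) {
  const observations = {};
  for (let step = 0; step < maxSteps; step++) {
    const decision = fakeLlm(request, observations);                        // think
    if (decision.action === 'finish') return decision.answer;               // task complete
    observations[decision.action] = tools[decision.action](decision.args);  // act, then think again
  }
  throw new Error('Agent did not finish within the step limit');
}

const plan = runAgent({ destination: 'Costa Rica', type: 'adventure' });
console.log(plan);
```

The maxSteps guard matters in practice: a real model may keep asking for more information, so agents usually cap the number of iterations.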

The API Chaos Problem

Here's where things get complicated. Every travel service has its own API:

Airbnb uses /v2/listings/search

TripAdvisor uses /experiences/search

National Parks Service uses /activities/parks

Local tour operators each have unique endpoints

```javascript
// The nightmare of integrating multiple travel APIs - each one is different!
// (Endpoints and payload shapes are illustrative, not documented production APIs.)
class HolidayPlanningAgent {
  constructor({ airbnbToken, bookingAuth, hotelsKey }) {
    this.airbnbToken = airbnbToken;
    this.bookingAuth = bookingAuth;
    this.hotelsKey = hotelsKey;
  }

  async findAccommodations(destination, checkin, checkout, guests) {
    const results = [];

    // Airbnb - uses Bearer token, POST request with a JSON body
    const airbnbResponse = await fetch('https://api.airbnb.com/v2/listings/search', {
      method: 'POST',
      headers: {
        'Authorization': `Bearer ${this.airbnbToken}`,
        'Content-Type': 'application/json'
      },
      body: JSON.stringify({
        location: destination, // calls it 'location'
        check_in: checkin,     // underscore format
        adults: guests
      })
    });
    // Returns: { listings: [{ price_per_night, coordinates: {lat, lng} }] }
    const airbnb = await airbnbResponse.json();
    results.push(...airbnb.listings.map(l => ({
      source: 'airbnb', price: l.price_per_night, lat: l.coordinates.lat, lng: l.coordinates.lng
    })));

    // Booking.com - uses Basic auth, GET request
    // (fetch has no `params` option, so query parameters must be encoded into the URL)
    const bookingQuery = new URLSearchParams({
      city: destination,      // calls it 'city'
      checkin_date: checkin,  // different naming
      room1: `A,A,${guests}`  // completely different format
    });
    const bookingResponse = await fetch(
      `https://distribution-xml.booking.com/2.4/json/hotelAvailability?${bookingQuery}`,
      { method: 'GET', headers: { 'Authorization': `Basic ${this.bookingAuth}` } }
    );
    // Returns: { result: [{ min_total_price, latitude, longitude }] }
    const booking = await bookingResponse.json();
    results.push(...booking.result.map(h => ({
      source: 'booking', price: h.min_total_price, lat: h.latitude, lng: h.longitude
    })));

    // Hotels.com - uses API key, different date format
    const [year, month, day] = checkin.split('-'); // incoming dates are YYYY-MM-DD
    const hotelsQuery = new URLSearchParams({
      destinationString: destination,          // yet another name
      arrivalDate: `${month}/${day}/${year}`,  // wants MM/DD/YYYY format
      RoomGroup: `(A,${guests})`               // parentheses format
    });
    const hotelsResponse = await fetch(
      `https://api.ean.com/ean-services/rs/hotel/v3/list?${hotelsQuery}`,
      { headers: { 'Authorization': `EAN APIKey=${this.hotelsKey}` } }
    );
    // Returns: { HotelListResponse: { HotelList: { HotelSummary: [{ lowRate }] } } }
    const hotels = await hotelsResponse.json();
    results.push(...hotels.HotelListResponse.HotelList.HotelSummary.map(h => ({
      source: 'hotels', price: h.lowRate // this shape has no coordinates at all
    })));

    return results; // three APIs, three hand-written normalizers
  }

  async findActivities(destination, type) {
    // TripAdvisor - GET with its own category vocabulary
    const tripAdvisorQuery = new URLSearchParams({
      location: destination,
      category: this.mapToTripAdvisorCategory(type)
    });
    await fetch(`https://api.tripadvisor.com/api/partner/2.0/experiences/search?${tripAdvisorQuery}`);
    // Returns: { data: [{ experience_id, title, price_from }] }

    // GetYourGuide - POST with a JSON:API-style envelope
    await fetch('https://www.getyourguide.com/api/v1/activities', {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({
        data: { type: 'activity-search', attributes: { destination } }
      })
    });
    // Returns JSON API format: { data: [{ id, attributes: { title, price: { amount } } }] }

    // National Parks - calls it 'q' instead of 'destination'
    await fetch(`https://developer.nps.gov/api/v1/activities/parks?${new URLSearchParams({ q: destination })}`);
    // Returns: { data: [{ parks: [{ fullName, entranceFees: [{ cost }] }] }] }

    // ...and each of these three shapes would need its own normalizer, as above.
  }

  // Every API needs different category mappings
  mapToTripAdvisorCategory(type) {
    return { 'adventure': 'outdoor-activities', 'culture': 'cultural-tours' }[type];
  }
}

/*
The Problem:
- 6 APIs with different authentication schemes (Bearer, Basic, API key)
- 6 different request formats (POST vs GET, different JSON structures)
- Different parameter names for the same concept (location vs city vs destinationString vs q)
- 6 different response formats to parse and normalize
- 6 different error handling approaches
- Constant maintenance as each API evolves independently
Result: You spend more time on API integration than actual holiday planning logic!
*/
```

Each API returns data in a different format, requires different authentication, and uses different parameter names. If you want your AI agent to work with hundreds of accommodation providers, activity companies, restaurants, and attractions, you'd need to write custom integration code for each one.

This is like needing a different remote control for every device in your home: technically possible, but practically unsustainable.

Model Context Protocol: The Universal Translator

Model Context Protocol (MCP) solves this problem by creating a standardized way for AI agents to communicate with external services. Instead of writing custom code for each travel API, you create an MCP server that acts as a translator between the standardized MCP language and the service's specific API.

Think of MCP as creating a universal interface. Instead of your AI agent needing to learn 1,000 different ways to say "find adventure activities," there's now one standard way that works with any MCP-compatible service.
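To make the "translator" idea concrete, the sketch below shows the kind of mapping an MCP server would hide behind a single tool: one standard set of arguments goes in, and each provider's own parameter names and date format come out. The provider parameter names mirror the illustrative API code above; the adapters object and translateSearch function are hypothetical.

```javascript
// One standard argument shape in, provider-specific parameters out.
// Parameter names mirror the illustrative APIs above; they are not verified real APIs.
const adapters = {
  airbnb: ({ destination, checkin, guests }) => ({
    location: destination, check_in: checkin, adults: guests,
  }),
  booking: ({ destination, checkin, guests }) => ({
    city: destination, checkin_date: checkin, room1: `A,A,${guests}`,
  }),
  hotels: ({ destination, checkin, guests }) => {
    const [year, month, day] = checkin.split('-'); // ISO YYYY-MM-DD -> MM/DD/YYYY
    return { destinationString: destination, arrivalDate: `${month}/${day}/${year}`, RoomGroup: `(A,${guests})` };
  },
};

// The AI agent only ever sends the standard shape; the server fans it out per provider.
function translateSearch(standardArgs) {
  return Object.fromEntries(
    Object.entries(adapters).map(([provider, adapt]) => [provider, adapt(standardArgs)])
  );
}

const requests = translateSearch({ destination: 'Costa Rica', checkin: '2024-12-15', guests: 2 });
console.log(requests.hotels.arrivalDate); // 12/15/2024
```

Adding a provider means adding one adapter on the server, not teaching every AI client a new API.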

The Three Pillars of MCP

An MCP server can expose three types of capabilities (a given server may offer any combination of them):

1. Tools - The Actions

Tools are the things your AI agent can do. These are functions that perform actions or retrieve data:

search_accommodations(location, checkin, checkout, guests)

find_activities(destination, activity_type, difficulty_level)

get_restaurant_recommendations(location, cuisine, budget)

check_weather_forecast(destination, dates)
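In MCP, each tool is advertised (via tools/list) with a name, a human-readable description, and an inputSchema written in JSON Schema; that schema is how the client and the model learn which arguments a tool expects. The sketch below describes find_activities from the list above. The enum values and the tiny checkArguments helper are illustrative assumptions; a real server would typically use a full JSON Schema validator.

```javascript
// A tool definition in the shape tools/list returns: name, description, inputSchema (JSON Schema).
// The enum values and required fields are illustrative assumptions.
const findActivitiesTool = {
  name: 'find_activities',
  description: 'Find bookable activities at a destination',
  inputSchema: {
    type: 'object',
    properties: {
      destination: { type: 'string' },
      activity_type: { type: 'string', enum: ['adventure', 'culture', 'relaxation'] },
      difficulty_level: { type: 'string', enum: ['beginner', 'intermediate', 'advanced'] },
    },
    required: ['destination', 'activity_type'],
  },
};

// Tiny check of required fields, unknown fields and enum values (not a full JSON Schema validator).
function checkArguments(tool, args) {
  const { properties, required = [] } = tool.inputSchema;
  const problems = [];
  for (const field of required) {
    if (!(field in args)) problems.push(`Missing required argument: ${field}`);
  }
  for (const [field, value] of Object.entries(args)) {
    const spec = Object.prototype.hasOwnProperty.call(properties, field) ? properties[field] : undefined;
    if (!spec) problems.push(`Unknown argument: ${field}`);
    else if (spec.enum && !spec.enum.includes(value)) problems.push(`Invalid value for ${field}: ${value}`);
  }
  return problems;
}

console.log(checkArguments(findActivitiesTool, { destination: 'Costa Rica', activity_type: 'adventure' })); // []
console.log(checkArguments(findActivitiesTool, { activity_type: 'skydiving' }));
```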

2. Resources - The Context

Resources are read-only data that the server exposes as context to help the AI make better decisions:

Destination guides and local customs

Activity difficulty ratings and requirements

Seasonal travel recommendations

Visa and vaccination requirements

Currency exchange rates and tipping guides
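Resources are identified by URIs: a client discovers them with resources/list and fetches their contents with resources/read. The sketch below mimics the shape of those two results for a single destination guide; the travel:// URI scheme, the guide name, and the placeholder text are hypothetical.

```javascript
// Resources are identified by URIs: resources/list advertises them, resources/read returns contents.
// The travel:// URI scheme and the guide text are hypothetical placeholders.
const resources = new Map([
  ['travel://guides/costa-rica/customs', {
    name: 'Costa Rica local customs and tipping guide',
    mimeType: 'text/markdown',
    text: '# Local customs\n(placeholder guide content)',
  }],
]);

// Shape of a resources/list result: metadata only, no contents.
function listResources() {
  return {
    resources: [...resources].map(([uri, r]) => ({ uri, name: r.name, mimeType: r.mimeType })),
  };
}

// Shape of a resources/read result: a contents array for the requested URI.
function readResource({ uri }) {
  const resource = resources.get(uri);
  if (!resource) throw new Error(`Resource not found: ${uri}`);
  return { contents: [{ uri, mimeType: resource.mimeType, text: resource.text }] };
}

console.log(listResources().resources.map((r) => r.uri));
console.log(readResource({ uri: 'travel://guides/costa-rica/customs' }).contents[0].mimeType); // text/markdown
```

In a real server, an unknown URI would be reported as a JSON-RPC error rather than a thrown exception; the MCP specification uses error code -32002 for a resource that is not found.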

3. Prompts - The Wisdom

Prompts are reusable, pre-written prompt templates that help the AI use the tools correctly:

"When planning adventure trips, always check weather patterns and seasonal accessibility"

"For family holidays, prioritize activities suitable for all age groups mentioned"

"When suggesting accommodations, consider proximity to planned activities"

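Prompts are listed with prompts/list and fetched with prompts/get, which fills in the template's arguments and returns ready-to-send messages. The sketch below turns the first guideline above into a parameterized template; the prompt name plan_adventure_trip, its single argument, and the getPrompt helper are hypothetical, while the result shape (a description plus a list of role/content messages) follows the MCP specification.

```javascript
// A prompt template in the shape prompts/list advertises and prompts/get returns.
// The prompt name, its argument and the wording are hypothetical.
const prompts = new Map([
  ['plan_adventure_trip', {
    description: 'Plan an adventure trip with weather and accessibility checks',
    arguments: [{ name: 'destination', description: 'Where the trip goes', required: true }],
    render: ({ destination }) =>
      `Plan an adventure trip to ${destination}. ` +
      'Always check weather patterns and seasonal accessibility before suggesting activities.',
  }],
]);

// prompts/get: validate arguments, then return the filled-in messages.
function getPrompt({ name, arguments: args = {} }) {
  const prompt = prompts.get(name);
  if (!prompt) throw new Error(`Unknown prompt: ${name}`);
  for (const arg of prompt.arguments) {
    if (arg.required && !(arg.name in args)) throw new Error(`Missing argument: ${arg.name}`);
  }
  return {
    description: prompt.description,
    messages: [{ role: 'user', content: { type: 'text', text: prompt.render(args) } }],
  };
}

const result = getPrompt({ name: 'plan_adventure_trip', arguments: { destination: 'Costa Rica' } });
console.log(result.messages[0].content.text);
```
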
The Architecture: Client Meets Server

MCP follows a simple client-server model:

MCP Client (your AI agent or IDE):

Discovers what an MCP server can do

Sends requests to perform actions

Receives responses and data

MCP Server (the service provider):

Exposes tools, resources, and prompts

Handles the actual API calls to external services

Returns standardized responses

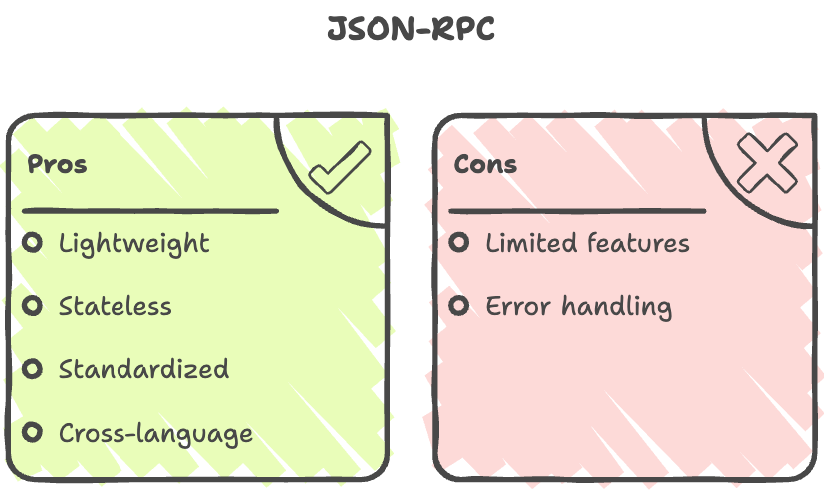

The communication happens through JSON-RPC, a lightweight protocol that's like having a standardized conversation format:

Client: "Can you search for adventure activities?"

Server: "Yes, I can do that. Give me destination, activity type, and difficulty level."

Client: "Find zip-lining activities in Costa Rica for intermediate level"

Server: "Here are 8 zip-lining tours matching your criteria..."
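The conversation above corresponds to two MCP methods: tools/list for discovery and tools/call for execution. The sketch below wires a toy server to a client in the same process using plain objects; the find_activities tool and its canned reply are hypothetical, while the message shapes (params.name and params.arguments on the request, a content array in the result) follow the MCP specification.

```javascript
// Toy in-process MCP-style server handling tools/list and tools/call.
// The tool and its canned reply are hypothetical; the message shapes follow MCP.
const serverTools = new Map([
  ['find_activities', {
    definition: {
      name: 'find_activities',
      description: 'Find activities at a destination',
      inputSchema: {
        type: 'object',
        properties: { destination: { type: 'string' }, activity_type: { type: 'string' } },
        required: ['destination'],
      },
    },
    run: ({ destination, activity_type }) => `Found 8 ${activity_type ?? 'matching'} tours in ${destination}`,
  }],
]);

function handleRequest(message) {
  const reply = (result) => ({ jsonrpc: '2.0', id: message.id, result });
  const fail = (code, text) => ({ jsonrpc: '2.0', id: message.id, error: { code, message: text } });

  if (message.method === 'tools/list') {
    return reply({ tools: [...serverTools.values()].map((tool) => tool.definition) });
  }
  if (message.method === 'tools/call') {
    const name = message.params?.name;
    const tool = serverTools.get(name);
    if (!tool) return fail(-32602, `Unknown tool: ${name}`);
    const text = tool.run(message.params.arguments ?? {});
    return reply({ content: [{ type: 'text', text }] }); // results are a list of content items
  }
  return fail(-32601, 'Method not found');
}

// Client side: discover the tool first, then call it by the name the server advertised.
const listed = handleRequest({ jsonrpc: '2.0', id: 1, method: 'tools/list' });
const toolName = listed.result.tools[0].name;
const called = handleRequest({
  jsonrpc: '2.0',
  id: 2,
  method: 'tools/call',
  params: { name: toolName, arguments: { destination: 'Costa Rica', activity_type: 'zip-lining' } },
});
console.log(called.result.content[0].text); // Found 8 zip-lining tours in Costa Rica
```

Swapping the in-process call for a stdio pipe or an HTTP request changes only how the messages travel, not their shape.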

The Communication Protocol: JSON-RPC 2.0

Before diving into the MCP specification itself, we need to understand how MCP clients and servers actually communicate with each other. MCP uses JSON-RPC 2.0 as its communication protocol—think of it as the "language" that clients and servers speak when exchanging information.

What is JSON-RPC?

JSON-RPC (JSON Remote Procedure Call) is a lightweight, stateless protocol that allows a client to call methods on a remote server and receive responses. It's essentially a standardized way to say "run this function with these parameters" across a network or between processes.

The "2.0" refers to the specification version, which defines exactly how requests and responses should be formatted. This standardization ensures that any JSON-RPC 2.0 client can communicate with any JSON-RPC 2.0 server, regardless of what programming language they're built with.

The Basic Structure

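Every JSON-RPC request includes a jsonrpc version string (always "2.0"), a method name, an id that the matching response echoes back, and usually a params object (params may be omitted when a method takes no arguments). A message without an id is a notification, and the receiver never replies to it. The example below is plain JSON-RPC; in MCP itself, a tool like search_hotels would be invoked through the tools/call method described later.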

JSON-RPC 2.0 request structure:

```json
{
  "jsonrpc": "2.0",
  "method": "search_hotels",
  "params": {
    "destination": "Costa Rica",
    "checkin": "2024-12-15",
    "checkout": "2024-12-22",
    "guests": 2
  },
  "id": 1
}
```

JSON-RPC 2.0 response structure (success):

```json
{
  "jsonrpc": "2.0",
  "result": {
    "hotels": [
      {
        "id": "hotel_123",
        "name": "Rainforest Lodge",
        "price_per_night": 150,
        "location": "Manuel Antonio",
        "rating": 4.5
      },
      {
        "id": "hotel_456",
        "name": "Beach Resort",
        "price_per_night": 200,
        "location": "Guanacaste",
        "rating": 4.2
      }
    ]
  },
  "id": 1
}
```

JSON-RPC 2.0 response structure (error):

```json
{
  "jsonrpc": "2.0",
  "error": {
    "code": -32602,
    "message": "Invalid params",
    "data": "Missing required parameter: destination"
  },
  "id": 1
}
```

Transport Mechanisms

JSON-RPC 2.0 is transport-agnostic, meaning the protocol doesn't dictate how messages are delivered. MCP supports two primary transport methods:

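Standard I/O (stdio): Ideal for local integrations and testing. The client launches the MCP server as a subprocess and exchanges newline-delimited JSON-RPC messages over its standard input and output. The protocol traffic never leaves the machine, which makes this lightweight and a natural fit for IDEs like Cursor or for VS Code extensions.

HTTP: Used when the MCP server is hosted remotely or needs to serve multiple clients simultaneously. In the Streamable HTTP transport (introduced in the March 2025 revision of the specification, replacing the earlier HTTP+SSE transport), the client sends each message as an HTTP POST request, and the server can reply with a single JSON response or stream messages back using Server-Sent Events. This makes it easy to deploy MCP servers as web services.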
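With the stdio transport, each JSON-RPC message is written as a single line of JSON terminated by a newline, and messages must not contain embedded newlines. Because pipes deliver data in arbitrary chunks, the reader has to buffer until a full line arrives. Here is a minimal sketch of both halves; encodeMessage and createLineDecoder are hypothetical helper names, not SDK functions.

```javascript
// stdio framing sketch: each JSON-RPC message is one line of JSON terminated by "\n".
// JSON.stringify without indentation escapes newlines inside strings, so one message = one line.
function encodeMessage(message) {
  return JSON.stringify(message) + '\n';
}

// Incoming data can arrive in arbitrary chunks, so buffer until a complete line is available.
function createLineDecoder(onMessage) {
  let buffer = '';
  return (chunk) => {
    buffer += chunk;
    let newlineIndex;
    while ((newlineIndex = buffer.indexOf('\n')) !== -1) {
      const line = buffer.slice(0, newlineIndex);
      buffer = buffer.slice(newlineIndex + 1);
      if (line.trim() !== '') onMessage(JSON.parse(line));
    }
  };
}

// Usage: simulate one message split across two chunks, as can happen with real pipes.
const received = [];
const decode = createLineDecoder((message) => received.push(message));
const wire = encodeMessage({ jsonrpc: '2.0', id: 1, method: 'tools/list' });
decode(wire.slice(0, 10));
decode(wire.slice(10));
console.log(received[0].method); // tools/list
```

In a real stdio server you would call process.stdin.setEncoding('utf8'), feed its 'data' events into the decoder, and write replies with process.stdout.write(encodeMessage(reply)). Since stdout is reserved for protocol messages, any logging belongs on stderr.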

MCP-Specific Conventions

While MCP uses standard JSON-RPC 2.0, it defines specific method names and parameter structures:

Discovery Methods:

tools/list - Get available tools

resources/list - Get available resources

prompts/list - Get available prompts

Action Methods:

tools/call - Execute a specific tool

resources/read - Retrieve resource content

prompts/get - Get a specific prompt

Notification Methods (no response expected):

notifications/initialized - Client signals it's ready (sent after the initialize request has been answered)

notifications/progress - Server reports progress updates
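The distinction between requests and notifications comes down to the id field: a request carries one and expects a response echoing it, while a notification has none and never gets a reply. The hypothetical JsonRpcClient below (not an SDK class) shows that bookkeeping, including the usual opening sequence: an initialize request, then notifications/initialized, then normal traffic. The initialize parameters are illustrative values and the server's reply is simplified.

```javascript
// Client-side bookkeeping sketch: requests get an id and await a reply; notifications get neither.
// JsonRpcClient is a hypothetical class, not part of any MCP SDK.
class JsonRpcClient {
  constructor(send) {
    this.send = send;         // delivers one message to the server (stdio, HTTP, ...)
    this.nextId = 1;
    this.pending = new Map(); // id -> method of each request still awaiting a response
  }

  request(method, params) {
    const id = this.nextId++;
    this.pending.set(id, method);
    this.send({ jsonrpc: '2.0', id, method, ...(params ? { params } : {}) });
    return id;
  }

  notify(method, params) {
    // No id: the receiver must not send a response.
    this.send({ jsonrpc: '2.0', method, ...(params ? { params } : {}) });
  }

  // Pair an incoming response with the request that caused it.
  handleResponse(response) {
    const method = this.pending.get(response.id);
    if (method === undefined) throw new Error(`Unexpected response id: ${response.id}`);
    this.pending.delete(response.id);
    return { method, ok: !('error' in response), payload: response.result ?? response.error };
  }
}

const outbox = [];
const client = new JsonRpcClient((message) => outbox.push(message));

// 1. Handshake request (parameter values are illustrative).
const initId = client.request('initialize', {
  protocolVersion: '2025-03-26',
  capabilities: {},
  clientInfo: { name: 'holiday-agent', version: '0.1.0' },
});
// Simplified stand-in for the server's handshake reply.
client.handleResponse({ jsonrpc: '2.0', id: initId, result: { capabilities: { tools: {} } } });

// 2. Signal readiness: a notification, so no id and no reply.
client.notify('notifications/initialized');

// 3. Normal traffic can now begin.
client.request('tools/list');

console.log(outbox.map((message) => message.id)); // [ 1, undefined, 2 ]
```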

Error Handling

JSON-RPC 2.0 defines standard error codes that MCP extends:

-32700 Parse error (invalid JSON)

-32600 Invalid request (malformed JSON-RPC)

-32601 Method not found

-32602 Invalid params

-32603 Internal error

-32000 to -32099 Server-defined errors (MCP uses these for domain-specific issues)

This standardized approach means client applications can handle errors consistently across different MCP servers.
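Here is a minimal server-side sketch of how raw input maps onto the standard codes above: unparsable text yields -32700, a message that isn't valid JSON-RPC yields -32600, an unknown method yields -32601, bad arguments yield -32602, and anything unexpected yields -32603. The two handlers and the InvalidParamsError class are hypothetical. One MCP convention worth knowing: when a tool runs but fails (for example, the booking API is down), the server is expected to return a normal result flagged with isError: true so the model can see the failure, and to reserve JSON-RPC errors for protocol-level problems like these.

```javascript
// Server-side sketch: map bad input to the standard JSON-RPC error codes.
// The two handlers are hypothetical placeholders for real MCP methods.
class InvalidParamsError extends Error {}

const handlers = new Map([
  ['tools/list', () => ({ tools: [] })],
  ['tools/call', (params) => {
    if (!params || typeof params.name !== 'string') throw new InvalidParamsError('Missing tool name');
    return { content: [{ type: 'text', text: `Called ${params.name}` }] };
  }],
]);

const errorResponse = (id, code, message) => ({ jsonrpc: '2.0', id, error: { code, message } });

// Returns the response object to send, or null for notifications (which never get a reply).
function handleRaw(text) {
  let message;
  try {
    message = JSON.parse(text);
  } catch {
    return errorResponse(null, -32700, 'Parse error'); // id is unknown, so it must be null
  }
  if (message === null || typeof message !== 'object' || message.jsonrpc !== '2.0' || typeof message.method !== 'string') {
    return errorResponse(message?.id ?? null, -32600, 'Invalid Request');
  }
  const isNotification = !('id' in message);
  const handler = handlers.get(message.method);
  if (!handler) return isNotification ? null : errorResponse(message.id, -32601, 'Method not found');
  try {
    const result = handler(message.params);
    return isNotification ? null : { jsonrpc: '2.0', id: message.id, result };
  } catch (err) {
    if (isNotification) return null;
    return err instanceof InvalidParamsError
      ? errorResponse(message.id, -32602, 'Invalid params')
      : errorResponse(message.id, -32603, 'Internal error');
  }
}

console.log(handleRaw('{not json').error.code);                                                  // -32700
console.log(handleRaw('{"jsonrpc":"2.0","id":7,"method":"tools/remove"}').error.code);             // -32601
console.log(handleRaw('{"jsonrpc":"2.0","id":8,"method":"tools/call","params":{}}').error.code);   // -32602
```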

The beauty of this protocol choice is that it abstracts away all the complexity we saw in our API chaos examples. Instead of learning different authentication schemes, parameter formats, and response structures for each service, developers work with one consistent JSON-RPC interface while MCP servers handle the underlying API complexity behind the scenes.

The Ecosystem Effect

The real power of MCP emerges when it becomes widespread. Imagine a world where:

Every major travel platform provides an MCP server

Tourism boards offer MCP servers for their destinations

AI development tools automatically discover and connect to travel MCP servers

Building a holiday planning agent is as simple as configuring which destinations and services you want to include

Your agent can seamlessly work with any new tourism service that supports MCP

We're moving toward a future where AI agents can plan and coordinate complex holiday experiences as easily as humans browse travel websites—and MCP is the standard that makes it possible.

What's Next?

Now that you understand the foundational concepts of MCP, you're ready to dive into the technical implementation. In the next article, we'll explore how to build your own MCP server, create custom tools, and integrate everything into a working AI agent system inside our Angular project.

The beauty of MCP is that once you understand these core concepts, the implementation becomes a matter of following the specification—and that's where the real fun begins.

Thanks for reading so far 🙏

I’d like to have your feedback, so please leave a comment, clap or follow. 👏

Spread the Angular love! 💜

If you liked it, share it among your community, tech bros and whoever you want! 🚀👥

Don’t forget to follow me and stay updated! 📱

Thanks for being part of this Angular journey! 👋😁